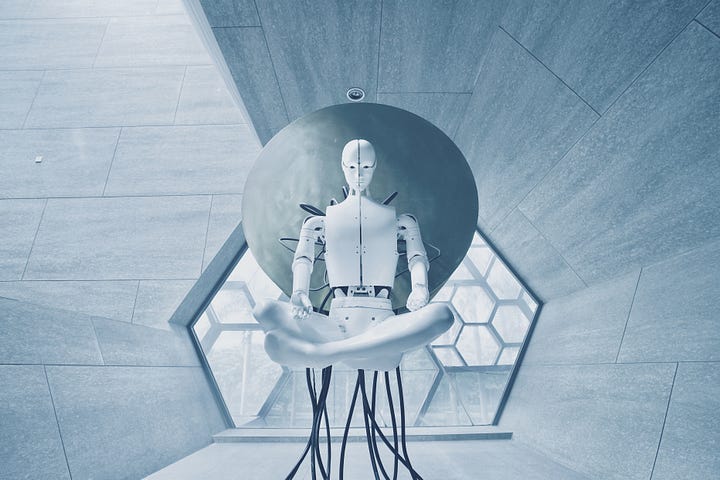

[DDIntel] The AI Scare - 3rd AI Winter Ahead?

Tech CEOs such as Elon Musk are hitting the brakes on AI, but can you blame them? The consequences for the economy and the world could be dire if we don’t take some time to plan ahead. The breakneck adoption of ChatGPT, and of AI in general, is like unleashing a horde of Terminators on the job market, lea…
